openIDL Navigation

Page History

...

- DR - standardize on a SQL engine and store or more cleanly decouple the API from DB, more complicated but bespoke for each carrier - here is a paylod with what we need, standardizes whats avail in HDS, put it all on the carriers to do extract for api call payload OR standardize on an engine - in between solution is allow carrier to decide, API layer doing what it is doing now: passing sql payload, and if you choose to use DB prescribed by openIDL - but if a carrier wants to use another they can write their own inbetween - allow to move fast for this one

- KS - can we guarantee extraction is the same across carriers?

- DR - no less than today, if the HDS is the same across all carriers, intro risk EP written by carrier isn't accurate compared to EPs on nodes

- PA - current workflow the Stat Agent is writing EP, carrier consenting

- DR - writing same thing for mult engines harder - we standardize on engine, stand on API, most carrier just do that - in the corner case wherre comeone refuses or cannot they have option to do it another way and cert data is accurate

- DH - how work? with standard set of queries for stat reporting: fixed, known, unchanging yoy - in this case the EP is unique

- DR - standardize on the output, assume most carriers stand on whats made for them

- JB - ad hoc request relevant - efficiences, need ability to execute SQL, just b/c postfges doesn't mean postgres - create tables and views something a DBA may not want

- DR - an addition, "can we use mult engines?" not sure we can - need to use API extract layer, doesn't think we need to , more palatable to carrier, if rhey cant do data calls efficiently they can revert back to bespoke (their decision)

- DH - value goes away if we can do data calls efficiently

- DR - doesn't change how we interact, from exp there are carreirs who have to use X, if we support too many a problem too

- JB - analysis of what level of SQL is common/needed

- DR - not sure you will find most SQL engines that can use generic sql efectively

- KS - opp to discover

- DR - he is ok with this approach, but in resp to ? "lets expand db engines" - he doesn't think expanding is the way to go

- PA - use time to pivot to notes

- KS - 2 things

- can we defer this decision - doing it in Postgres, ID where there are postgres specific things, ways there is a smaller footprint, und what it looks like, create table structures, do it in postgres, best choice, maybe add in mariaDB, other things

- if we go down postgres, is there somethign we can articulate, or put into docs, "if you do this then..." have we thrown the baby out with the bath water asking people to write their own SQL

- DR - try to make too cloud agnostic, too many abstractions and compromises, go w/ postgres for now, not a bad thing if carriers have to write their own EPs rather than they dont join the platform

- PA - give DBA a postgres compliant EP, they could convert to another engine

- DR - and provide sample dataset to run both against

- KS - if allow them to write their own, give them a testset

- DR - preferred approach, go ahead with postgres, know how to handle this, dont entertain us maintaining us writing mult EPs for diff DBs

- PA - by halloween postgres working, test it against oracle stuff, will do analysis, syntax things, ID whats not compliant with others

- DR - James advocating this approach, looking at complexities - we are good, dont need to make decision now, dont believe if we have to expand access natively supporting EPs is the way to go, provide interface spec and allow carrier to cust as they see fit

- KS - keep efficiency and guarantee all are running the same thing

- DR - doesn't care about EP code, cares about the data model

- KS - diff perspective

- PA - PA HDS

- DR - votes for RDS or Aurora over Kubernetes

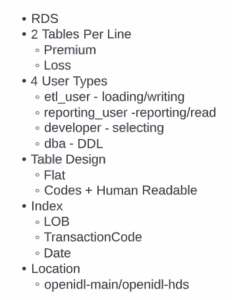

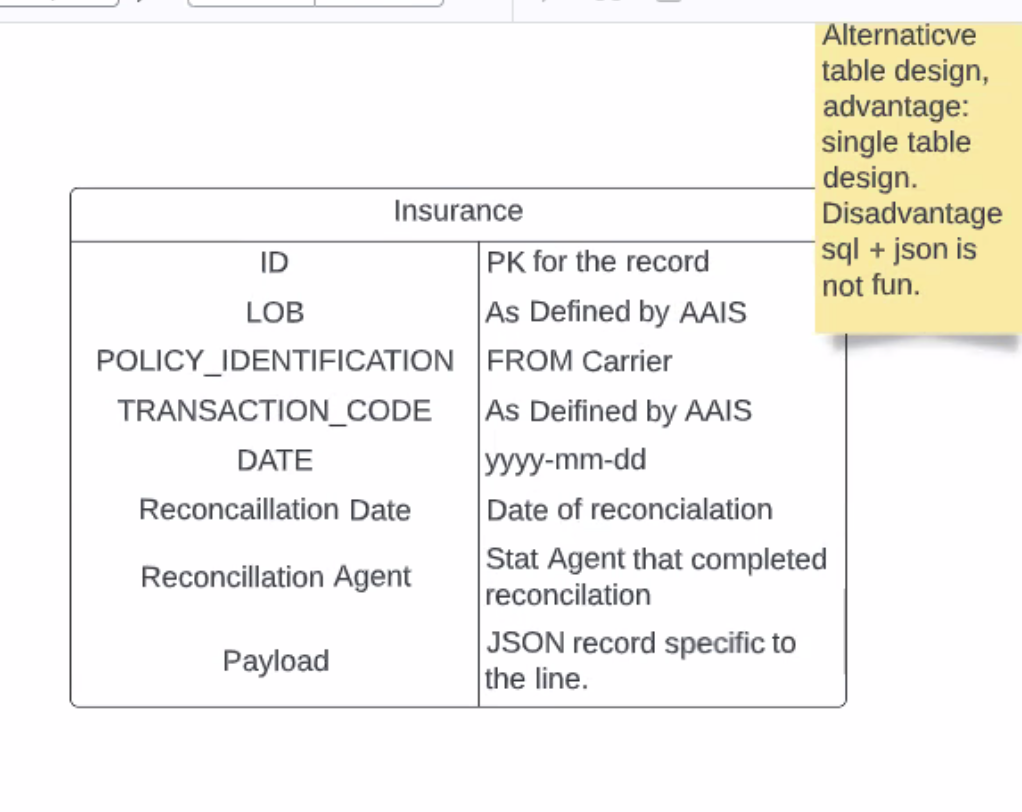

- PA - payload specific to record type as JSON, simpler for loading but more complex for Extract (Sql/JSON not fun) - 2 tables per line (1 prem 1 loss), index tables on LOB/Tracnsact code/date, all scripting goes to main/openidl-hds

- KS - why not just have 3 tables? bunch of overlap between the two, not saying should but working in there, prob 10 or so fields all the same

- PA - accounting date on both of them

- KS - largely denormalized, transactional, single coverage per transact, can be flat, but some overlap between the two

- PA - 2 diff objects and one object per table

- KS - take the common stuff and put it into a 3rd table

- DR - doesn't need to be very performant

- PA - seeing two tables as raw tables, idea of easy to access business level at a later date

- DR - makes sense

- DH - loss divided between outstanding (incurred) and paid - diff transactions - transacrt of paid and incurred total amt expect to pay on that claim

- PA - use views to see those? working on trying to find the most recent outstandings, view layer may be something to look into

- DR - if we use views on day one, may be complex and relied upon

- PA - working under KISS

- DR - views are great, make things easier, sometimes become relied upon

- DH incurred inculed paid while outstanding is "whats left" in a point in time

- KS - can't und suggestion - give diff data?

- DR - lots of use, horrible data warehouses impossible to understand

- PA - use an anon block and do it in a single sql

- KS - script or statement? script, postgres specific

- MZ - I joined this week, so sorry if I am asking repeat questions. In DevOps wiki documentation I saw GIt and versioning strategy. Are we also planning to use similar for data base change and versioning, like using Liquibase? Schema changes?

- KS - too early, single schema, not for POC

- DR - asking for lots of maint and specilaization w/in db, might be tougher, goes to last point, once get more complex then more complex versioning and deployment (accross carriers)

- PA - make sql statements independent of sql scripts, get to maturity we can automate to track DDLs

- KS - looking at stat plans dont get much schema migration, big ask for someone to ask to change schema

- PA - depends how you do it? if we add more columns to end of table wont break existing

- DR - not change is simple or easy - it is a change

- JB - manage over network

- DR - less complex you make it for use to adhere to the more adoption you will get - value to TRV is extracting complexity from them, closer you get the better adoption, more it is a chore to maintain the less adoption and more resistance

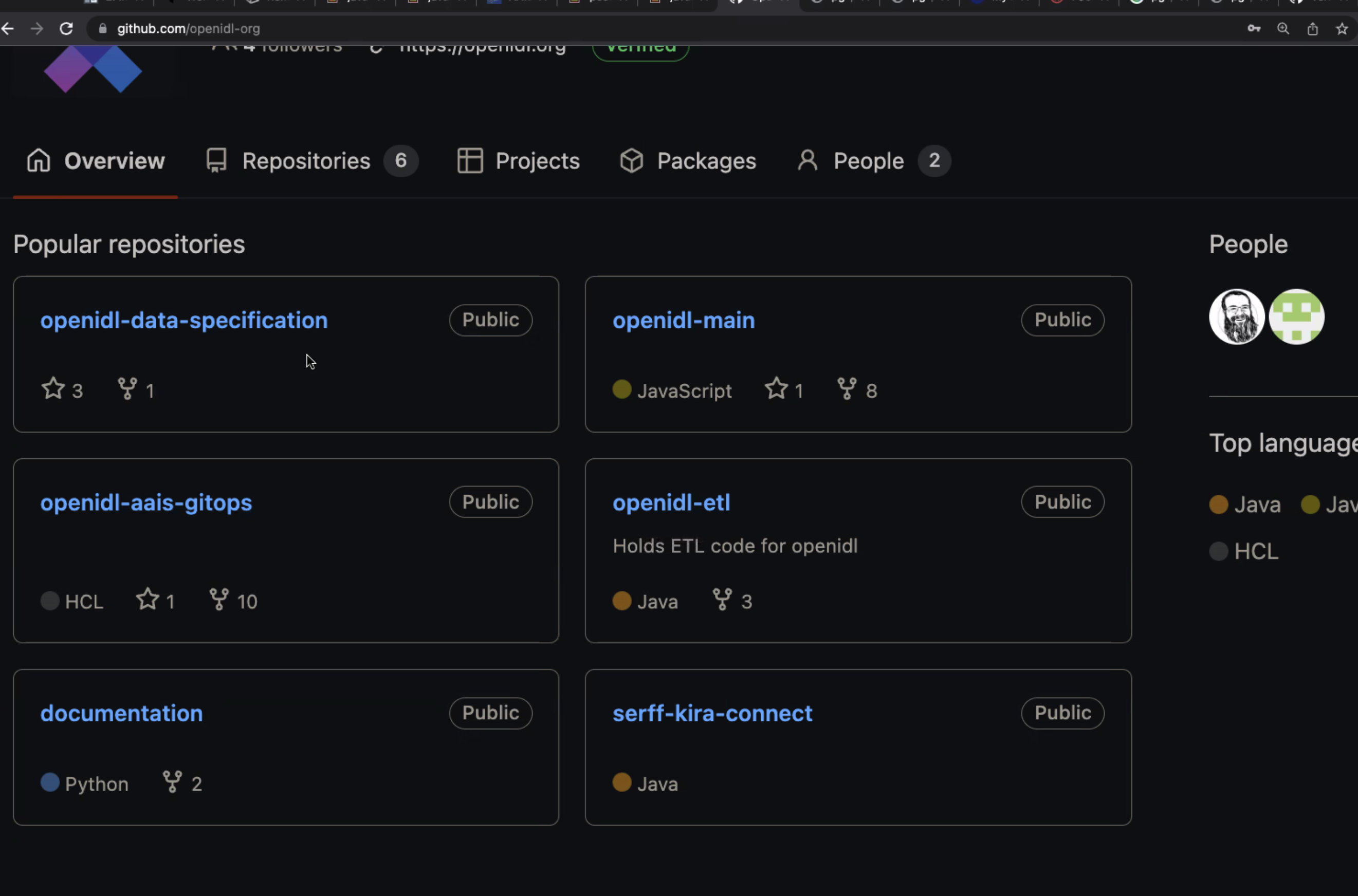

- PA - last bullet point, ok w/ location for scripting? make a new dir in openIDL-main

- bump into challenges and we will describe it

- KS - what should we be talkign about now ? structure of script? Peter? Talk about details of what David brought up (temp structures in postgres? multistep processing?)

- PA:

- PA - sub apps/lambda functions, new: openIDL-hds, worked with data engineer, produced table for prem records, 1 col per attribute, mostly varchars, mostly numerics - worked today on loading script, simple, not super fast, havent gotten into making the report yet, delay until we talk to James, brought up Friday, need to add col, unique ID to each record we receive - sequence or GUID? table until James is on the call, will faciliate testing - by next week will have loss and prem loaded, - https://github.com/openidl-org/openidl-main/tree/hds/openidl-hds

- PA - DH and PA looking at metrics and KPIs from data sets, good process

- KS - source data?

- PA - got a company closed, got records from 15 years ago, modified, encrypted and removed identifiers, 10k prem records sanitized and 5k loss records

- KS - source?

- PA - using coded messages - originnal stat plan formmated data, not edited, has been decoded

- DH -cREATED expected values based on the data provide, to test that we ult get it right

- KS - slightly diff than what we gave to DH

- KS - this is the HDS format?

- PA. - table create statement for premium record/auto: https://github.com/openidl-org/openidl-main/blob/hds/openidl-hds/AU_PREMIUM.sql

- KS - EP will run script against HDS to produce one carriers slice of the reslut that is the stat report, data from one carrier's perspective

- PA - earned and summed premiums,

- KS - col by col, needs to be a single script - haven't decided on managed postgres, etc. just that it IS Postgres

- KS - just postgres data source

- PA - once we start looking at how we deploy we look at how/what and then "how is the adapter going to connect", big points of decision

- PA - JS + SQL

- KS - not saying allowing JS to run as part of the extract, as a starting point

- PA - like Python, but chaincode...

- KS -chaincode is in GO, we dont touch, all the code for ui, etc. is in JS - not talking about Kubernetes at this point

- PA - for HDS, dont know enough about adapter or chaincode

- DR - dont think we need kub on carrier side, JS as a lang, py is preferable but dont need to, this is time to discuss "how do we anticipate that working": sql script, return data - what does that look like

- KS - if i were running inside AAIS direct, set up lambda with API gateway and that sit, not sure if it is right direction for this, huge decision, ok saying 'run as a lambda?"

- DR - think so, could also step further and say , the hard part is the SQL script, could extract to point where you say need API, need - api - people will take, lift/load and use it - how do we encapsulate that sql statement?

- KS - plaintext?

- JB - some form of protocol to say what request is to wrap that text, something to create table, sep from extraction, simple message/protocol

- DR - how much is predefined vs extensible - easiest way is dumb API, sends super simple encrypted sql statement, assumes prior knowl of what to expect? fine or need more extensible

- JB - some kind of header or content descriptor, not blindly execute

- DR - could make it more flexible or more hardcoded for lack of a better term - schema vs schemaless, well defined vs open ended

- KS - api results schema is json object or all these fields, assuming going in, one endpoint, having diff endpoint for ea use case wont scale well

- DR - quicker, but wont scale - other ? - is there a need to have a signature of this to verify against? executable code asked to be run - some check needed to check against "yes this is an API call with a query to execute how do I make sure this is the right query"

- DR = some mech by which you say "yes I have an API call and it is what we say it is", maybe built that into auth flow? payload is trusted? thinking what cybersec peopel would want - exec code that returns data - million ways we could do it - do we want somethign to verify the payload is unaltered - simplest a CRC checksum signature kind of thing - if you download my code this is the sig you should get - code wasnt tampered with or you did something goofy - notes it is "RIGHT" and makes sure all are running exact same code against db

- KS - trusting what they are asking is what should be run, high level

- DH - signature both ways? here is what we want you to run and here is what we ran?

- DR - outbound, assume it is all encrypted in transit, have some negotiated key exchange, know it came from us - some kind of handshake - nuance is in one direction there is a party executing code from an outside source, API off-flow, e

- FZ - if altering data, ID of sender and instructions are compatible with what you are sending, if ID and instruction not altering anyhting

- KS - James put forth it should not alter HDS, where we came up with second schema - need to know what that script is gonna do to run against the HDS a

- DR - quick easy check: here is what it is suppsed to do, here is the signature for the code that does X, verify

- JB - session based?

- DR - extra layer, when you run this checksum, code, hash, this is what you should see and if you dont see it something is wrong

- DH - how do we know code is not going to cause any harm to HDS or deleting records, etc.

- PA - compartmentalize the risk, doing reporting, specific users

- DR - down the road more important, running not only against the db, limiting connections

- PA - RDS has features, amount of risk isnt huge

- DR - not huge but also lightweight - hash and publish the hash before running, gives a sense of security and consistency everyone is running exact same code

- PA - signed payload like linux ISO, can pub hash, will have more insight next week, earned prem, small way to show what is req to get here, not too complex, help carriers going thru cyber audits

- KS - if one API vs mult schema oriented apis then generic schema

- DR - the other discussion, not all hash needs to be transmitted over the wire, pointers to docs that desc what it is, dont anticipate feature rich facility at the begininin

- KS - getting somehwere with actual script is the biggest in the near term

- DR - get something to show as soon as possible: API Call Made, Code Run, Results Returned - dont get bogged down day 1, things need to be there, can be handled by references - from carrier perspex that would be good, maybe info is pre-shared or published in advance to keep API logic down

- KS - if we can get James involved, would be good, talk about running the script and that process tomorrow (10/11), did start moving documentation on the wiki to make room for incoming documentation

Time | Item | Who | Notes |

|---|---|---|---|

Overview

Content Tools