openIDL Navigation

Page History

...

- PA - (Takes us through the work he has been doing with the test dataset https://github.com/openidl-org/openidl-main/blob/hds/openidl-hds/AU_PREMIUM.sql

- PA - Sequence or GUID

- KS - unique identifiers - who owns the ID? does HDS own it or do we expect outside of HDS to do stuff w/ it

- PA - primary key on record at transact level should never leave your area/priv account/pdc, makes sense to have that decentralized and controlled by HDS

- KS - no other outside systems dependent on it either - for debugging

- JM - benefits of GUID - scalability, ease vs scale, interesting question of how postgres chokes at scale? do we care? put it in a GUID have more parallelism than choking in sequence, load on this is slow and dont mind sequencing or parallelization you are done - if you have a GUID generator throws a 36 byte string guaranteed to be unique and run massively parallel processing - only a scalability question but does complicate things

- PA - misunderstood guid - not a guid for every single carrier but across individual HDS

- KS - do not want to throw out massive parallelism

- JM - GUIDs unique as well, will we ever put together for mult carriers? No, not at transaction level - can we use SEQUENCES on day 1 and keep GUID in mind if you need it

- PA - Milind put the command in to do the guid, can make that happen

- KS - thing you lose with guid is ease of understanding of the key, takes time to work with the guid, has pros and cons, a dev staring this down another thing they need to keep in mind, if using primarily to debug stuff, tend to use GUIds for stuff and uniqueness

- JM - not massively over engineered, make sure seed it correctly,

- MZ - GUID is better

- KS - nothing says must do sequence OR guid, if not hard to create, sequence is unique, guids might be. worth it

- PA - insert 1 vs insert many, doing parallel stuff will be key

- JM - test the hell out of it - no magic, big fancy hashing algo, depends on what you hash: server name, etc.

- PA - will do some work on it

- KS - adapter and API layer, sequence diagram and lost on a couple spots, why do we need a second schema, cant DB do things w/o destruction to the schema

- KS: Guiding forces

- transax processor runs a single sql script - create table-run query, etc.that can do mult steps w/o being destructive to HDS, having a second schema

- how do you get results back to processor

- sep schema is to appease policy reqs that not too much control over hds schema

- split schema for org considerations

- dont want higher power in HDS schema

- PA - stored proc (dead), maybe use anonymous block to have one sql script executed

- JM - talked about simple and ended in complex, the challenge is what gets back to EP, if you execute the sql script (fairly trivial to write big script) - issue is how to get input back to EP - make query as complicated as you want but only get one result back - ? - what if you have more than one table? at some point you need to get info back to EP - it is elegant to say only last query gets to return results - if you have one, fine but what if you have multiples?

- JB - merit in being to accomodate more than one table - argument keeping separate makes it easier to adopt openIDL in the future is a good one

- JM - 2 issues: a. schema separation (org govertnance and security concerns) - day 1 EP submits whole script and you get one table back - there is an interesting question in how to get it back

- KS - DR felt you don't need sep schema

- JM - technically DR is right, design constraint: big orgs nutty about db power, 2 schemas solves org problem, 1/2 dozen operations, set of scary rights DBs worry about results in log discussion

- KS - diff argument, project: make sure we can do something, maybe not MVP but run a single script that can do the stuff we need to do in a single script in HDS and it does work, now lets figure out will it pass muster in policy groups then go to second schema to solve policy issues

- JM - policy question not an empirical question - are DBA teams nutty about power

- KS - for H this is important, for T it didn't seem to be the same level of importance

- PA - for AAIS feels weird to give that level of power to raw tables

- JM - if we have good modular design should be fairly easy to add loops to the base (scripts), design day 2

- JM - ask we dev in highly modular fashion, compnentns have no external coupling, constraining ourselves by removing loop - you have 000s of queries in script, 2 classes

- 1 does something

- 1 returns results

- do there need to be two scripts?

- KS - into the weeds how you return results? populating table?

- JM - n number of queries and commands, lets assume EP in javascript you have DB connection/objects (run these commands), hey engine pls run this query - dml command, return code, run script that can return command, have populated memory struct in javascript, "execute query, return results"

- KS - script or sql command - running a sql script running commands, still able to return results out of script

- JM - dont think we should run a script - "what client am I pusing this through" - resp of EP to scan and submit each query to postgres engine

- KS - differing from yesterday - how do we get results from script? prob can't - jm doesn't want to run script

- JM - challenge is getting results back, run script is easy but if we do needs andother step, run script need to run a client, easy to tell client run script but challenge is getting results back

- PA - if i am runnign script as part of EP, some ID associated, can't i make a table in results schema I loaded up

- KS - need schema to see final table

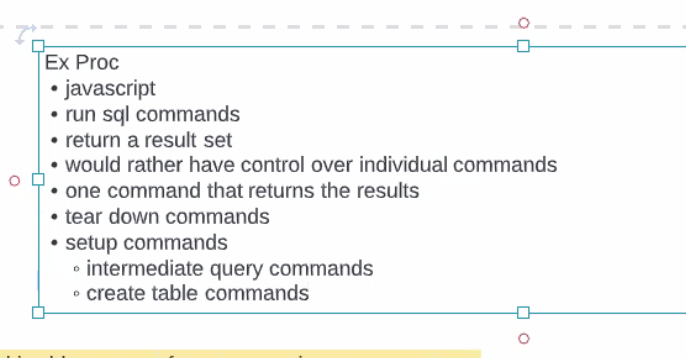

- JM - no you don't, if i submit EP and has 10 SQLs in it, and tell EP "run scriopt and loop through", runs 1-5, last query returns result set (might create tables, views, drop tables), EP needs some mech to identify which is the query to return result set - some set of commands to set up, set up table and breakdown scripts - design of object has any number of setup commands, singular query command (returns result set) and any number of teardown commands

- KS - back to a collection of commands

- MZ - just using sql commands? track versions?

- JM - currently just commands

- MZ - could have versioning for data definition changes

- KS - initial request might migrate over time

- JM - notion of data versioning is true, HDS has versioning, how do we pass script in, "queries compatible with x", design of scripts/queries is versioning, need ot make sure HDS is aware of version and EP is aware of version and can be compatible

- KS - one case of using collection of commands is just using, run them against postgres engine and last will be the result (one in the middle will be the result, one identified

- JM - mult query patterns at the same time... could get rid of looping entirely if we allow for query commands that return result sets if we interrogate them - any query that starts with "SELECT" returns a result yet - EP loops through all queries in script, hits "select" query

- KS - how do we pass the results of one command to return results

- JM - script writer has to do it, 3 setup commands (create tables, etc.) the EP runs each query doesn't know doesn't care - query writer has to know

- KS - how do you get results out

- JM - EP establishes session, run it, EP writers job to put commands

- PA - will deliver setup and result - three scripts: setup, result

- JM - getting rid of loop, any number of commands can return result sets, EPs obligation to interrogate those queries - if it starts with SELECT returns result - still loop in there thru the commands but super fine grained the loop, instead of looping at script level loop at the command level -

- JM - will look like a script, submit it to a client that submits it to the db, we are becoming the client, we need to loop, no magic, make basic rules (semicolon, blank lines, simple parsing)

- JB - comments in the script

- JM - do we put directives in?

- KS - directives nose under tent

- JB - fragments telling whats in next set

- JM - no command returns result set w/o being called SELECT

- JM - questions of pagination, etc. - if we agree we loop thru every query, when select we result set, MV1 dont return more than 10k rows

- PA - none of the reports we do early will have more than 10k rows

- JM - will have to deal with memory and pagination issues after Day 1 - hide attribution and fine grained info - intermediate should never leave db engine

- KS - if assuming says "select" and captures results - instead of allowing mult returns only last select statement?

- JM - day 1 = one table

- PA - all standard ones will be one table (there are non standards)

- JM - hard part of mult result sets, whats hard is 2-3-4 results sets how do you return via API, maybe we say API returns 3 huge chunks of JSON and the receiver figures it out

- KS - still stuck on SELECT returns result and others

- JM - only select statement returns results

- PA - for all the annual DOI stuff doing, in good position, need to talk to person at AAIS, lot of low hanging fruit with design

- JM - if only have select statement, MVP 1 run one select statement

- JB - flexibility from diff lines of business, combine and consolidate

- PA - do it all with intermediate temp tables

- JM - EOD 100 lines of JS, suprisingly tight, harder to understand than code

- KS - simple in JS,

- PA - other option, Extract would have name to it and fill table based on name, tear table down - result set in persisiten table noted by Extraction

- DH - perm table stored where?

- PA - your HDS

- JM - or the EP schema -

- DH - another thing to consider (maybe day 2) - companies will want to see results of queries run on their data

- JM - had that accounted for (test/eval or scanner validator)

Mon., Oct. 24, 2022

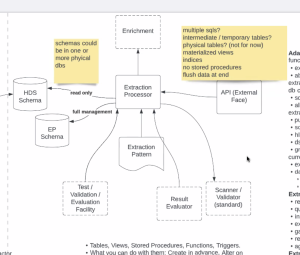

- KS - diagram shared last week - depiction of how work should flow. Some requirements embedded. (Document shared illustrating this).

- KS - high-level: bringing a script parsed into commands. Starts by quering HDS, can do intermediate tables for successive queries, can return results on any. Can ID queries as select statements. Parsing into collection of commands and executing those commands vis-a-vis 1-2 schemas. We will be walking through this today

- KS Queries against HDS - can we assume the first query we see goes against HDS and no others will?

- JM - no, but this isn't a problem - it's an asset

- KS - We have to ID which schema we're querying. JM: schema names are well defined.

- JM - we should prefix them with unusual enough names that it will forestall an issue, so that they can be used again and again. We should be highly prescriptive. Decision: openidl_base, openidl_ep. Select will identify the schema. JM: another aspect: the application user will have select authority against both, but only more advanced rights against EP.

- KS: how do we draw in sequence diagram? JM: open session doesn't actually go to schema. Sufficiently accurate to say you're creating tables in EP schema. When we get to the level of depth where any more doesn't give us add'l insights into requirements, we can stop there.

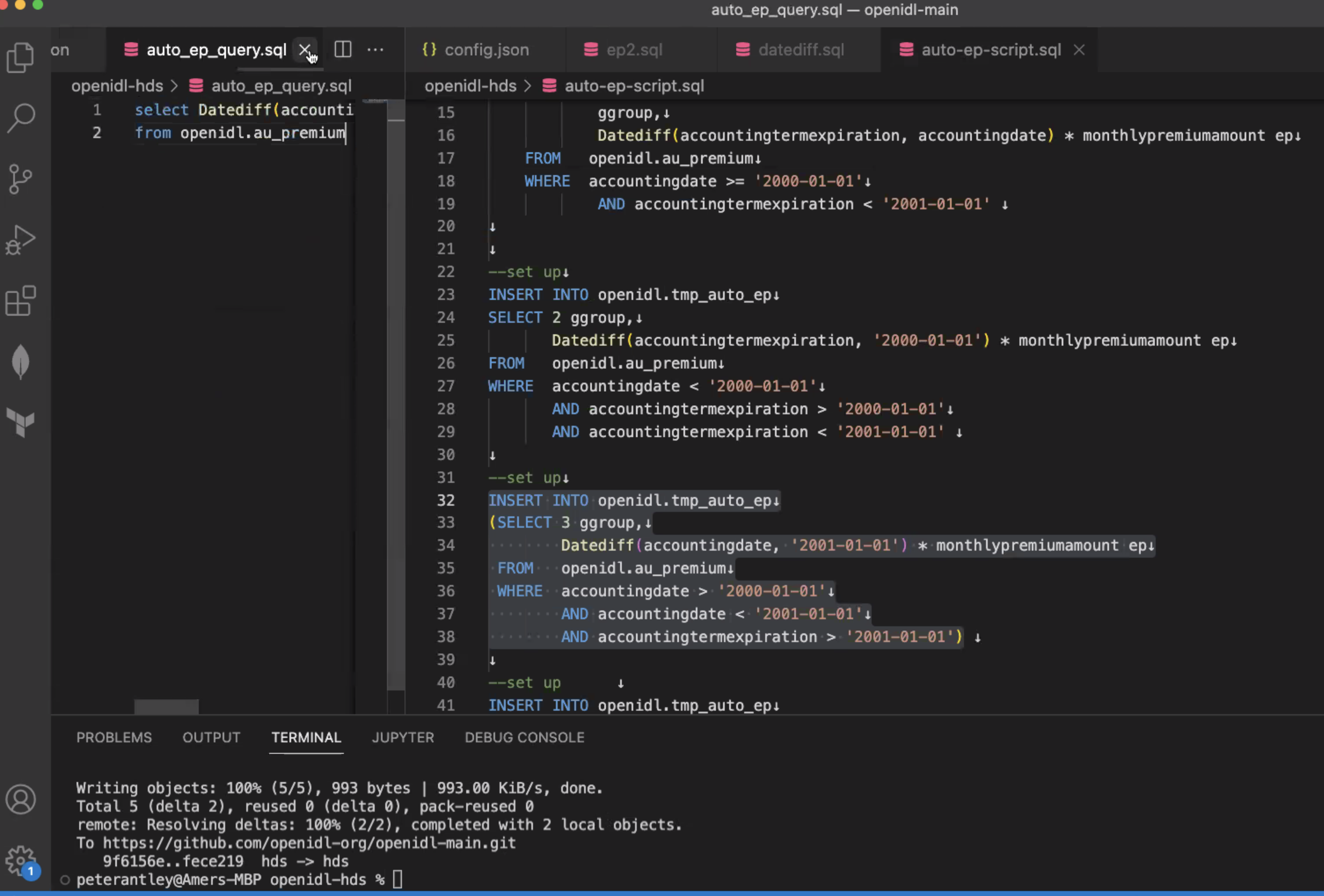

- KS: will take corresponding modifications offline. (PA asked KS to pull link to Github from chat, and PA shared screen)

- PA: in a perfect situation, this is all we need to produced earned premium:

- PA: Extraction for EP comes down to selecting sum from temp table. Teardown at end is drop of 1 table. 8 columns -EP is first column. Next column is EP by coverage - so breakdown will expand significantly over time.

- KS: current path is sufficiently robust to implement this. PA: this is actually a single select statement (KS pointed out not a single select to implement the whole thing).

- KS: Line 14 - this is a create table statement not a select statement. We have to recognize HDS request as a select statement. Break it up in the script

- JM: No, will be fine - tokenizing this, where each token = a query, we just show first token. Responsibility of the query writer to show two schemas in question.

- JM: All scripts assume default schema is openIDL_ep - we only specify schema for occasions that involve reaching out to base schema. We leave it blank. Because it is so common to reference EP schema

- JM: but in creating base schemas we want to specify schema name, absolutely. (KS: this happens outside of extraction execution. PA: but pivotal in setting up HDS).

- PA: we process records differently depending on when they fall in the year.

- JM: This demonstrates the case of being able to make intermediate tables. For people who have to read it comprehensibility is a must. PA: for EP - could make more sense to utilize a function and build it differently.

- JM: for scalability & modularity, the desire for functions explains the need for separation (HDS)

- PA: with a test set, we can establish a high confidence level that functions are working correctly.

- JM: single function business point for every business rule. Functions should be defined in either the base schema or EP schema but not borne into extraction patterns.

- JM: if we can put business logic into code modules, we will see repetition even within functions that need to be tabularized. This will put us in a highly scalable architecture/part of a scalable solution.

- JM: this is critical if we ask Mr. Antley and others to start coding

- JM: We also ask selves if functions should be built into base data? PA: can't do w/ETL time - until we know what our bounds are we can't calculate the results.

- JM: We should assume we want to use functions at this point. PA: functions should be part of bedrock infrastructure, not incorporated at query time, or SQL query will get so large that most people can't even read it.

- PA: with the power of functions, I can do one select statement and get results comparable to auto coverage report. Functions we're talking about right now will be created before extraction is run, + validated and tested

- KS: Disagreed because we have to govern those functions and install them. Doesn't believe this is the right approach - we want to keep it as simple as possible and just run the script

- PA: we have to do governance regardless, and not many functions made. e.g., 10 functions, tested, validated, and used repeatedly. The amount of testing done w/extraction patterns goes up exponentially without the use functions.

- KS: not challenging logic but timing. Doesn't believe we need to go there for the MVP. KS: More complicated parsing. PA: but we would set a delimiter.

- PA: we are likely taking about 9 functions. First is DateDiv (2 iso dates - difference btwn dates/months). 1 function for each column in that table.

- JM: if we can develop a set of functions that map cleanly to business requirements - lucid enough that any businessperson can read it, this represents a huge strength.

- KS: we've added a significant dependency on strong governance. Latest version at any time will be necessary for a user to run extraction patterns. Key consideration. (Potential drawback)

- JM: but no worse than the fact that we'll need to govern it anyway. Without functions, all logic has to fit inside extraction patterns. Then we have to add more and more to script, we could end up with massive extraction patterns. Massive prework to get one basic result. Ignores our ability to take advantage of the inevitable repetition within our codes. Also creates issues with versioning.

JB: Trying to put a higher-level overview on our discussion - impact of the adapter on our functionality. Presented diagram w/various endpoints/API. Other types of interactions in addition to basic extraction.

- Since we are positing notion of interface to a hosted node, function of knowing what's in the queue will need to be proxied out to some form of API.

- View Data Call request will ferret out specific request in the standing queue.

- Approving data call request - part of the validation process.

*****

JB: What I was describing is in idl adapter. Just looking at API.

JM: Who is the client of API? KS: as we're doing an extraction for a data call, we're interacting with this part to say "I consent.' Chain code says "I need to get data from carrier." Trigger in Kubernetes - an application called the extraction processor that lives in Kubernetes in the hosted node (a node on the network - has functions that interact with HL fabric).

JB: Consent has to come from carrier, can't come from hosted node?

KS: Disagreed with this statement. SAAS at this point. Permissions will be required for the purpose of security. This picture suggests a UI, user interacts with via permissions from the host.

JB: We shouldn't assume implementations of full function node - that if there's a dependency on getting access to this...

Time | Item | Who | Notes |

|---|---|---|---|